Snapchat AI: How close is too close?

I was startled when I first saw the AI Bot at the top of my Snapchat list. “Why does a messaging app need to integrate artificial intelligence into its program?” I asked myself. I was initially scared of having AI software so closely connected to my personal life. It felt as though Snapchat was watching who I was talking to and what I was watching, constantly learning more and more about who I am as a human being. After a few hours of skepticism and genuine worry about giving it my data, I decided to try the bot out.

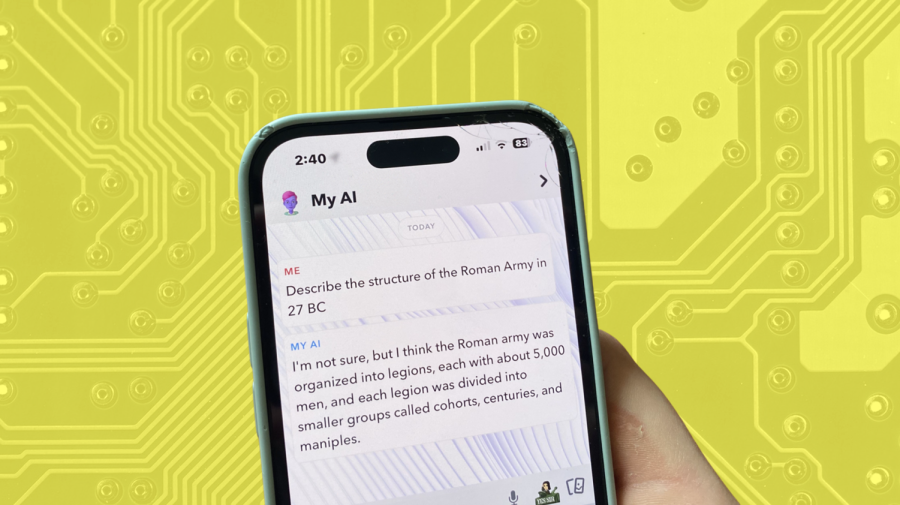

The new software, titled “My AI,” is analogous to ChatGPT but with a seemingly human tone of voice. It can answer many questions, like “Describe the structure of the Roman Army in 27 BC” or “What are the fundamental principles of Marxist thought?” But if I ask whether it thinks Marxist ideals were a good idea, it spits back an answer emphasizing that its code cannot generate an opinion. Like ChatGPT, the AI is limited to what has been published online. Additionally, it can analyze pictures it receives. I sent it various pictures to see its range of responses, and although it is not entirely accurate, the bot does a good job of responding to different situations. What’s especially creepy about this particular software is how quickly the answers are generated and the human-like style in which they are written. Snapchat intentionally designed this software to act like a friend, which seems like a breach of privacy to me. Unlike ChatGPT, which you have to log into in a browser, separate from all your other work, the Snapchat AI Bot sits at the center of the app’s conversation hub.

The AI Bot sits pinned at the top of the friends feed, the place where you send pictures and message your friends. You cannot delete it, unpin it or turn it off. I wondered why Snapchat made this program both permanent and so prominently placed. After interacting with the software for around twenty minutes, a scary thought dawned on me.

Children today are exposed to a vast range of screens and technologies. If companies like Apple or Samsung begin to integrate more and more AI into their software, children will be interacting with this artificial intelligence without fully grasping that it may not be 100% accurate or truthful. It has the potential to create false realities for people who learn to lean on it, both at a young age and further into adulthood. Snapchat is a perfect example.

Snapchat’s minimum age is only thirteen. No thirteen-year-old that I know fully understands how artificial intelligence works. Since it is based on machine learning, the more you interact with it, the better it can tailor its responses to you. There are going to be some kids, and even adults, who seek attention and emotional support from this AI software. The instantaneous responses, paired with the friendly tone of voice, can provide the perfect outlet for people who are lonely and need someone to talk to. I can see how one could argue that it might help people develop social skills or provide support to those who feel alone, but I firmly believe that creating an AI tool that acts as a “friend” (Snapchat’s name for the people you connect with on its app) is a slippery slope. Society is becoming machine-dominant faster than ever before. But do we want to live in a society where machines provide the emotional support and advice that only humans can truly comprehend?

Overall, the rise of AI in our daily lives has proved valuable and efficient for monotonous tasks. I am in favor of using ChatGPT to help answer unfamiliar questions or think through the best way of solving something. I use ChatGPT when I need to quickly memorize a list or want a condensed summary of some reading. But I want AI to stay as far away as possible from my personal life. Even Spotify’s launch of its artificially intelligent DJ is too close for comfort. It’s eerie to be unable to tell a robot’s voice from a human’s, and both of these companies have managed to make their AI sound that convincing. It makes me wonder what’s next in our technologically advanced world and what unforeseen consequences await.

Nick E • Apr 28, 2023 at 10:51 am

So great Zach! Love this article. Keep up the great work!